Journal of Systems Engineering and Electronics, 2023, Vol. 34, Issue (5): 1343-1358. doi: 10.23919/JSEE.2023.000113

Received: 2021-08-18; Online: 2023-10-18; Published: 2023-10-30

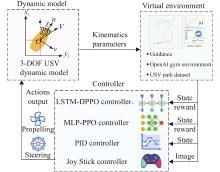

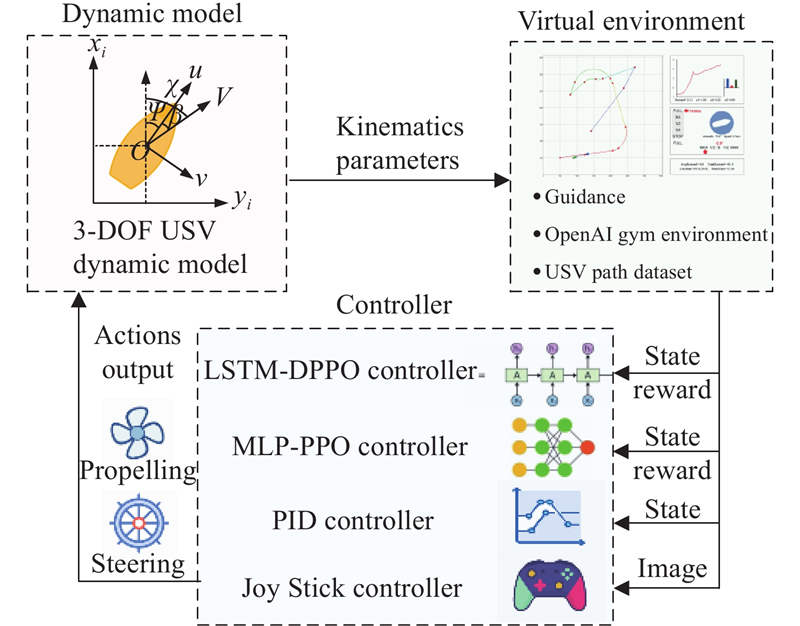

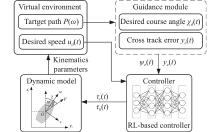

LSTM-DPPO based deep reinforcement learning controller for path following optimization of unmanned surface vehicle

Jiawei XIA1,2, Xufang ZHU3,*, Zhong LIU1, Qingtao XIA1

1 School of Weaponry Engineering, Naval University of Engineering, Wuhan 430033, China

2 Qingdao Campus, Naval Aviation University, Qingdao 266041, China

3 School of Electronic Engineering, Naval University of Engineering, Wuhan 430033, China


Contact: Xufang ZHU E-mail: 491650471@qq.com; 1580284687@qq.com; liuzh531@163.com; xiaqing777@163.com

About the authors:

XIA Jiawei was born in 1994. He received his B.S. and M.S. degrees from Naval University of Engineering (NUE), Wuhan, China, in 2016 and 2019, respectively. He is pursuing his Ph.D. degree in system engineering at NUE, Wuhan, China. His research interests include intelligent control of unmanned surface vehicles, deep reinforcement learning, and multi-agent control. E-mail: 491650471@qq.com

ZHU Xufang was born in 1978. She received her B.S., M.S., and Ph.D. degrees from Naval University of Engineering (NUE), Wuhan, China, in 1999, 2007, and 2017, respectively. She is a lecturer in the School of Electronic Engineering, NUE. Her research interests include target characteristics, information perception technology, and circuits and systems. E-mail: 1580284687@qq.com

LIU Zhong was born in 1963. He received his M.S. and Ph.D. degrees from Naval University of Engineering (NUE) and Huazhong University of Science and Technology, Wuhan, China, in 1984 and 2004, respectively. He is currently a full professor at the Shipborne Command & Control Department, NUE. His research interests include nonlinear control of mechanical systems with applications to robotics and unmanned surface vehicles. E-mail: liuzh531@163.com

XIA Qingtao was born in 1978. He received his B.S. and M.S. degrees from Naval University of Engineering (NUE), Wuhan, China, in 2001 and 2012, respectively. He is a lecturer in the School of Weaponry Engineering, NUE. His research interests include unmanned system warfare and command and control systems. E-mail: xiaqing777@163.com

Supported by: This work was supported by the National Natural Science Foundation (61601491), the Natural Science Foundation of Hubei Province (2018CFC865), and the China Postdoctoral Science Foundation Funded Project (2016T45686).

Cite this article

Jiawei XIA, Xufang ZHU, Zhong LIU, Qingtao XIA. LSTM-DPPO based deep reinforcement learning controller for path following optimization of unmanned surface vehicle[J]. Journal of Systems Engineering and Electronics, 2023, 34(5): 1343-1358.

"

| Variable | Meaning | Range |

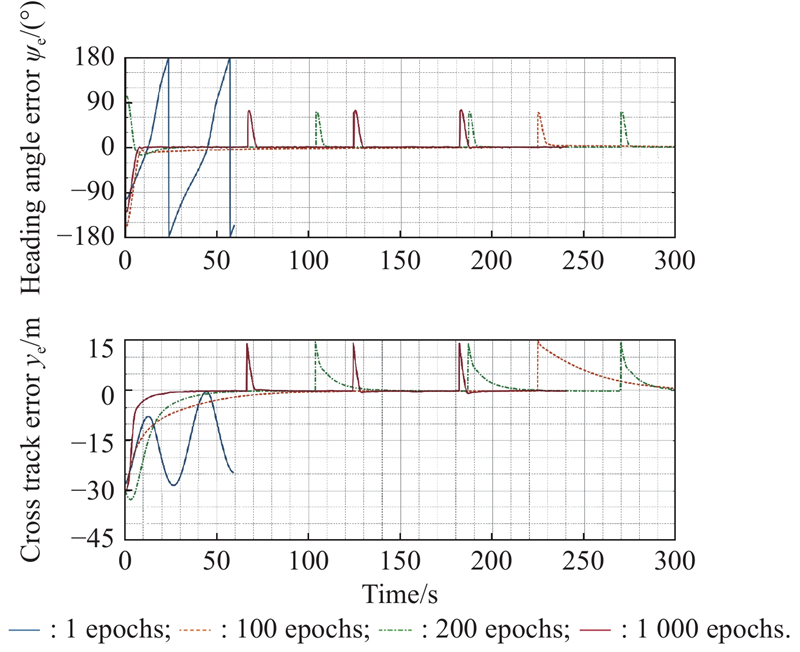

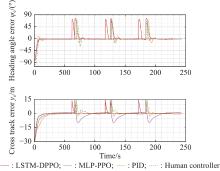

| | Heading angle error | [−180,180] |

| | Heading angle error derivative | |

| | Cross-track error | |

| | Cross-track error derivative | |

| | Speed error | [−20,20] |

| | Power output | [−1,1] |

| | Steering output | [−1,1] |

"

| Performance indices using standard path datasets | LSTM-DPPO 100 epochs | LSTM-DPPO 200 epochs | LSTM-DPPO 1000 epochs | MLP-PPO | PID | Joystick | |

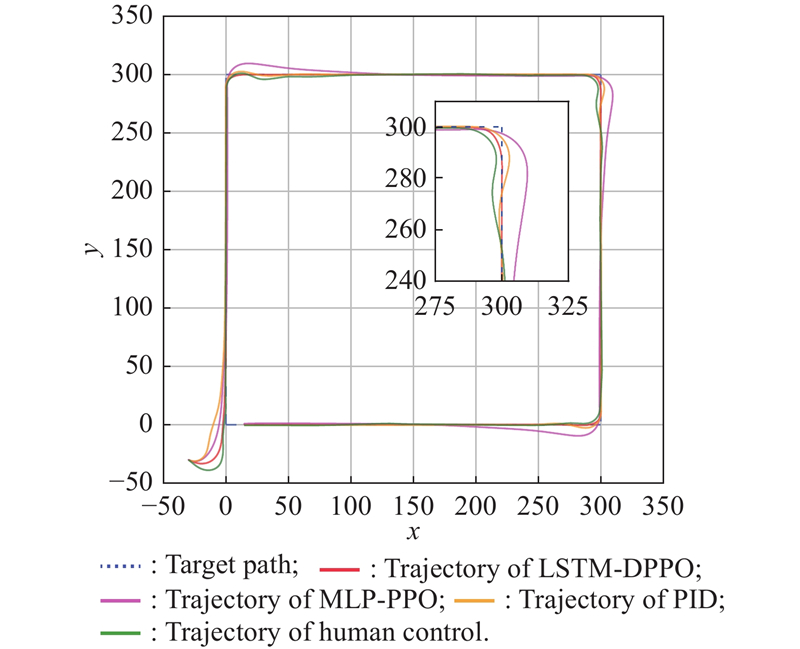

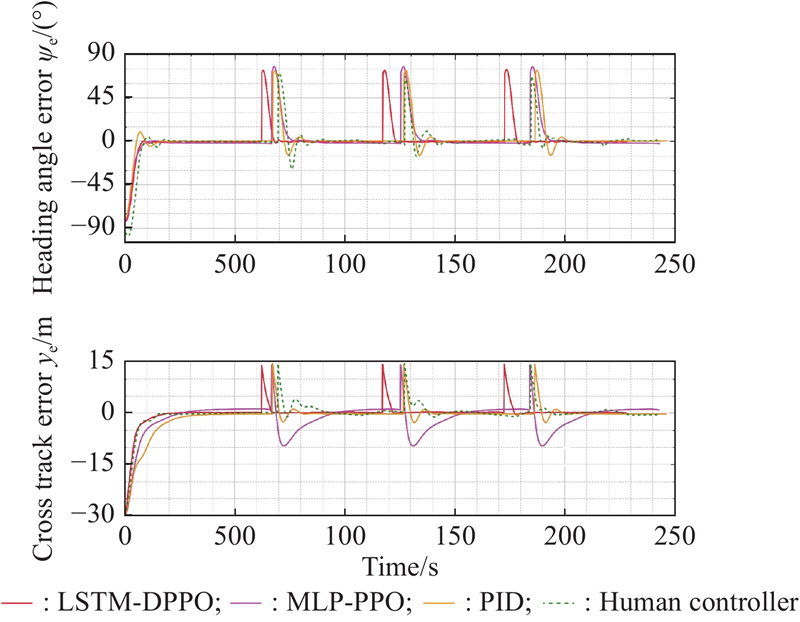

| Polygonal path | RMS H.A.E/(°) | 20.27 | 20.63 | 11.19 | 16.57 | 19.91 | 21.11 |

| RMS C.T.E/m | 3.78 | 3.38 | 3.12 | 3.80 | 4.49 | 5.26 | |

| Average reward | 0.59 | 0.65 | 0.78 | 0.70 | 0.72 | 0.65 | |

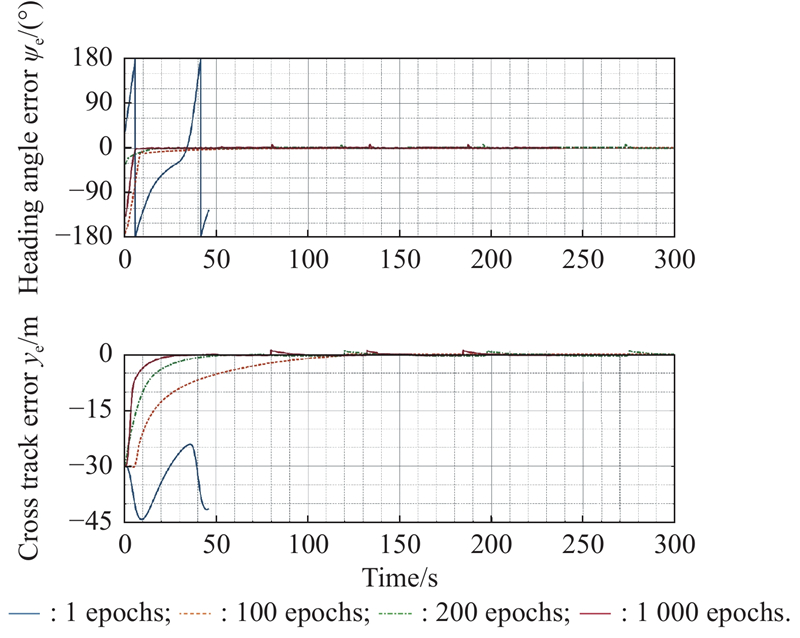

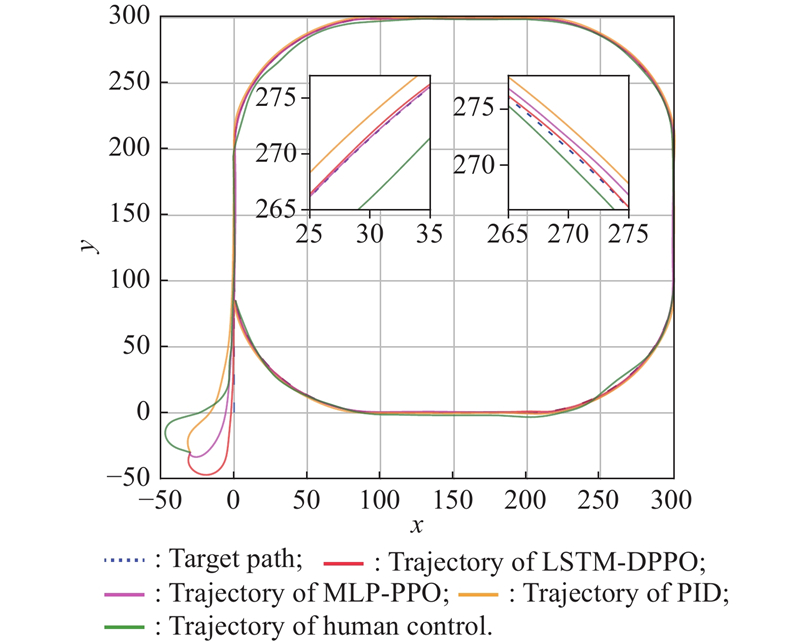

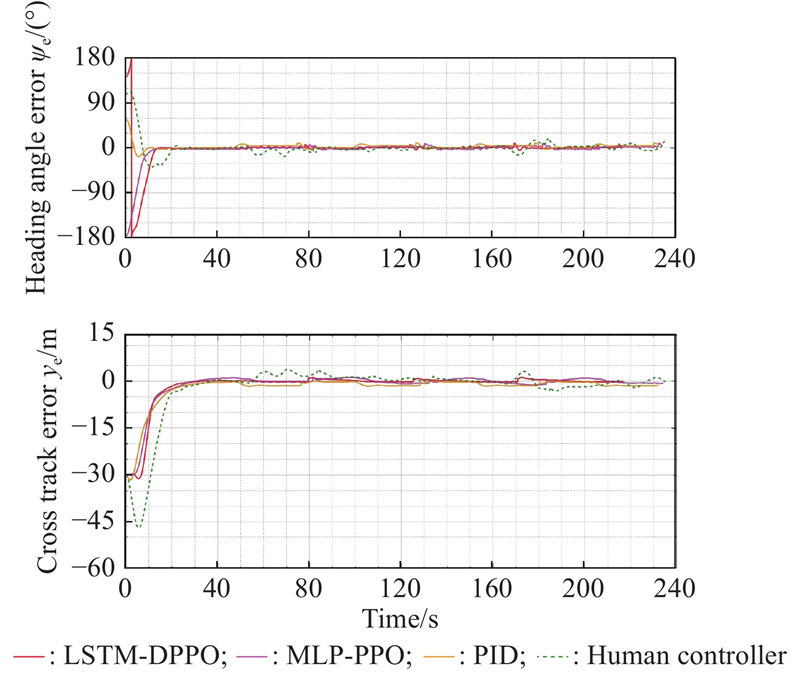

| Curved/mixed path | RMS H.A.E/(°) | 10.46 | 9.56 | 23.92 | 13.49 | 11.07 | 23.34 |

| RMS C.T.E/m | 3.35 | 3.81 | 3.55 | 3.78 | 3.90 | 5.28 | |

| Average reward | 0.62 | 0.66 | 0.73 | 0.73 | 0.74 | 0.69 | |

| All paths | RMS H.A.E/(°) | 17.46 | 17.47 | 14.83 | 14.91 | 17.39 | 21.56 |

| RMS C.T.E/m | 3.66 | 3.50 | 3.24 | 3.79 | 4.32 | 5.27 | |

| Average reward | 0.6 | 0.65 | 0.76 | 0.71 | 0.73 | 0.67 | |

"

| Standard path dataset | Performance index | 1 sample | 10 samples | 100 samples | 1000 samples |
| --- | --- | --- | --- | --- | --- |
| Polygonal path | RMS H.A.E/(°) | 21.28 | 21.60 | 19.05 | 11.19 |
| | RMS C.T.E/m | 4.85 | 4.37 | 4.36 | 3.12 |
| | Average reward | 0.69 | 0.654 | 0.74 | 0.78 |
| Curved/mixed path | RMS H.A.E/(°) | 13.23 | 9.09 | 8.70 | 23.92 |
| | RMS C.T.E/m | 4.90 | 3.80 | 2.95 | 3.55 |
| | Average reward | 0.69 | 0.71 | 0.77 | 0.73 |
| All paths | RMS H.A.E/(°) | 18.98 | 18.02 | 16.09 | 14.83 |
| | RMS C.T.E/m | 4.87 | 4.21 | 3.96 | 3.24 |
| | Average reward | 0.69 | 0.67 | 0.75 | 0.76 |

